I keep making the same observation: we are not great at verbalizing our skills and knowledge. This is not only true of testers and testing, but it is particularly true of them. Instead, we tend to behave as if the word testing implied more similarity in work and results than it does. Testers might, for example:

- Reproduce customer-reported issues from customer acceptance testing, improving insufficient reports and running change advisory boards to prioritize the customer issues.

- Spend time conducting a technical investigation of the system and its features and changes, reporting discrepancies they are curious about.

- Maneuver builds between environments so that customer acceptance testing can do what they feel like doing on the version targeted for production.

- Reboot the test environment on Wednesdays, since by then the low-end test environment has seen enough of the week to be less performant.

As a consultant, my CV (like my consultant colleagues' CVs) is often looked at when selecting us for delivery projects. Needing to create the concept of an Exceptional Consulting CV, I am experimenting with what works for me. Since the latest version of my CV is labeled confidential, I won't share it. Instead, I share some of the thinking I have been doing for it. And, well, for annual performance reviews. Those are the two reasons to wrap your skills and competences in a nice sales-oriented package.

Personal Skills

My first infographic is inspired by a re-emerging frustration: consulting CVs are often scanned for skills equivalent to "has painted with blue color", without considering how experience transfers between technologies, or how combinations of experiences make applying a particular color particularly insightful. I have written Python code. I have also written Java code. I choose not to list language-specific application frameworks, even though I have used some of them, because I am not making a case for hiring me as a developer. I want to focus on developing for the purposes of test problems.

Roles

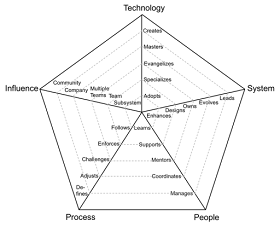

Past experiences mean little if the future I aspire to isn't about repeating past experiences. The future I aspire to is one of testing specialty. But alas, testing is not a single specialty. At minimum, it's three. And while I may be assigned the default role of Test Lead, I always prefer the role of Test Analyst and will intertwine that with whatever ideas people have about Test Automator. I call this mix Contemporary Exploratory Testing, and my insistence on this mix leads me to another infographic: the idea that the order in which you grow in the main skill areas depends on the role.

Test leads tend to grow on the people, process and influence axes. Test analysts tend to grow on the system and process axes. Test automators tend to grow on the technology and system axes. I have worked with people who can work, skills-wise, across the full range of five dimensions, but they can't do it time-wise. The full range is a team of people.
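The role-to-axis mapping above can be written down as data. This is only a minimal sketch in Python: the names `GROWTH_AXES` and `covered_axes` are made up for illustration, and the sets simply restate the paragraph above rather than any formal model.

```python
# Hypothetical encoding of the roles and growth axes named in the text.
GROWTH_AXES = {
    "test lead": {"people", "process", "influence"},
    "test analyst": {"system", "process"},
    "test automator": {"technology", "system"},
}


def covered_axes(roles):
    """Return the union of growth axes for the given roles."""
    covered = set()
    for role in roles:
        covered |= GROWTH_AXES[role]
    return covered


# The full range is whatever all three roles cover together.
ALL_AXES = covered_axes(GROWTH_AXES)

if __name__ == "__main__":
    for role, axes in GROWTH_AXES.items():
        missing = sorted(ALL_AXES - axes)
        print(f"{role}: grows on {sorted(axes)}, leaves {missing} to teammates")
```

In this sketch no single role covers all five axes, while the three roles together do, which is one way to read "the full range is a team of people".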

Multi-model Assessment Practice?

A fancy name, right? It basically says: take as many models as you feel you benefit from. This was my selection for describing skills, today. I have another selection for test process improvement assessments, which I also do multi-model: with Quality Culture Transition Assessment (modern testing), TPI-model (testing), and Testomat Assessment model (test automation), usually spiced with the Context STAR model and whatever complementary models I feel I could use, based on my experience as an assessor, for the observations I need to illustrate. I illustrate a lot of things. Like the things in this blog.

Create your own selection, and use bits of what I have if that is useful. I care less for a universal model, and more for a model that helps me make sense of the complexities I am dealing with.